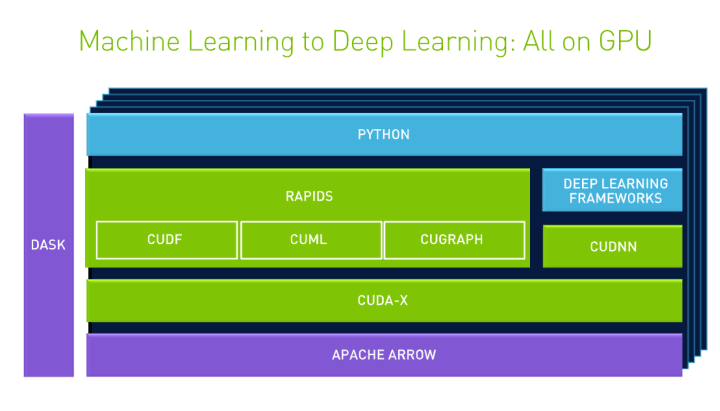

How to use NVIDIA GPUs for Machine Learning with the new Data Science PC from Maingear | by Déborah Mesquita | Towards Data Science

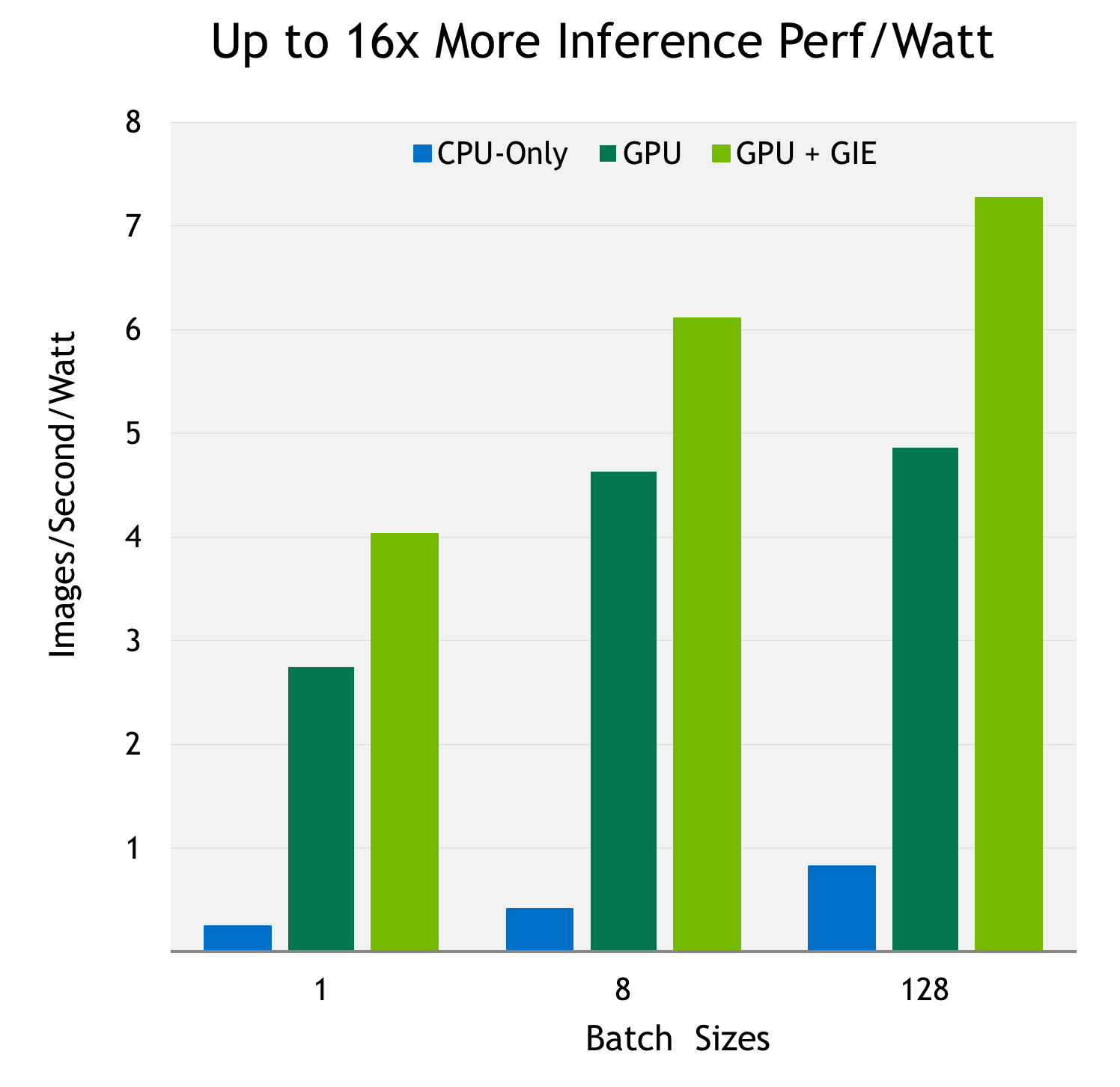

Sharing GPU for Machine Learning/Deep Learning on VMware vSphere with NVIDIA GRID: Why is it needed? And How to share GPU? - VROOM! Performance Blog